- Dynamo and BigTable — good preso overview of two approaches to solving availability and consistency in the event of server failure or network partition.

- Goals Gone Wild (PDF) — In this article, we argue that the beneficial effects of goal setting have been overstated and that systematic harm caused by goal setting has been largely ignored. We identify specific side effects associated with goal setting, including a narrow focus that neglects non-goal areas, a rise in unethical behavior, distorted risk preferences, corrosion of organizational culture, and reduced intrinsic motivation.

- Tech Isn’t All Brogrammers (Alexis Madrigal) — a reminder that there are real scientists and engineers in Silicon Valley working on problems considerably harder than selling ads and delivering pet food to one another. (via Brian Behlendorf)

- Numbers from 90+ Gamification Case Studies — cherry-picked anecdata for your business cases.

"distributed" entries

Swarm v. Fleet v. Kubernetes v. Mesos

Comparing different orchestration tools.


Most software systems evolve over time. New features are added and old ones pruned. Fluctuating user demand means an efficient system must be able to quickly scale resources up and down. Demands for near zero-downtime require automatic fail-over to pre-provisioned back-up systems, normally in a separate data centre or region.

On top of this, organizations often have multiple such systems to run, or need to run occasional tasks such as data-mining that are separate from the main system, but require significant resources or talk to the existing system.

When using multiple resources, it is important to make sure they are used efficiently (not sitting idle) but can still cope with spikes in demand. Balancing cost-effectiveness against the ability to scale quickly is a difficult task that can be approached in a variety of ways.

All of this means that running a non-trivial system is full of administrative tasks and challenges, the complexity of which should not be underestimated. It quickly becomes impossible to look after machines on an individual level; rather than being patched and updated one by one, they must be treated identically. When a machine develops a problem, it should be destroyed and replaced rather than nursed back to health.

Various software tools and solutions exist to help with these challenges. Let’s focus on orchestration tools, which help make all the pieces work together, working with the cluster to start containers on appropriate hosts and connect them together. Along the way, we’ll consider scaling and automatic failover, which are important features.
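To make "start containers on appropriate hosts" and "automatic failover" concrete, here is a minimal sketch of the idea in Python. It is not how Swarm, Fleet, Kubernetes, or Mesos work internally; the `Host` class, the `place` and `fail_over` functions, the memory-only resource model, and the "most free memory wins" policy are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    """A hypothetical cluster member that tracks memory only."""
    name: str
    capacity_mb: int
    containers: dict = field(default_factory=dict)  # container name -> memory in MB

    @property
    def free_mb(self) -> int:
        return self.capacity_mb - sum(self.containers.values())


def place(hosts, container, memory_mb):
    """Start a container on the host with the most free memory (a simple spread policy)."""
    candidates = [h for h in hosts if h.free_mb >= memory_mb]
    if not candidates:
        raise RuntimeError(f"no host has {memory_mb} MB free for {container}")
    target = max(candidates, key=lambda h: h.free_mb)
    target.containers[container] = memory_mb
    return target


def fail_over(hosts, dead):
    """Discard a failed host and re-place its containers on the survivors."""
    survivors = [h for h in hosts if h is not dead]
    for container, memory_mb in dead.containers.items():
        place(survivors, container, memory_mb)
    return survivors


hosts = [Host("host-a", 2048), Host("host-b", 2048)]
place(hosts, "web", 512)    # both hosts are empty, so the first one is chosen
place(hosts, "db", 1024)    # host-b now has more free memory
hosts = fail_over(hosts, hosts[1])  # host-b "dies"; db is restarted on host-a
```

The failed host is never repaired in this sketch: it is dropped from the cluster and its work is rescheduled, which mirrors the "destroy and replace" approach described above.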

Introducing “A Field Guide to the Distributed Development Stack”

Tools to develop massively distributed applications.

Editor’s Note: At the Velocity Conference in Barcelona we launched “A Field Guide to the Distributed Development Stack.” Early response has been encouraging, with reactions ranging from “If I only had this two years ago” to “I want to give a copy of this to everyone on my team.” Below, Andrew Odewahn explains how the Guide came to be and where it goes from here.

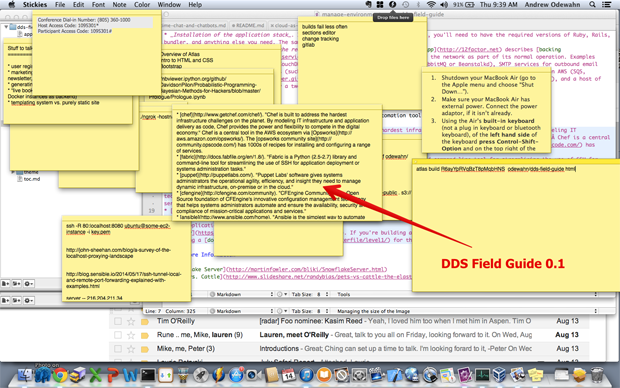

As we developed Atlas, O’Reilly’s next-generation publishing tool, it seemed like every day we were finding interesting new tools in the DevOps space, so I started a “Sticky” for the most interesting-looking tools to explore.

At first, this worked fine. I was content to simply keep a list, where my only ordering criterion was “Huh, that looks cool. Someday when I have time, I’ll take a look at that,” in the same way you might buy an exercise DVD and then only occasionally pull it out and think “Huh, someday I’ll get to that.” But, as anyone who has watched DevOps for any length of time can tell you, it’s a space bursting with interesting and exciting new tools, so my list and guilt quickly got out of hand.

Four short links: 20 June 2014

Available Data, Goal Setting, Real Tech, and Gamification Numbers

Everything is distributed

How do we manage systems that are too large to understand, too complex to control, and that fail in unpredictable ways?

“What is surprising is not that there are so many accidents. It is that there are so few. The thing that amazes you is not that your system goes down sometimes, it’s that it is up at all.”—Richard Cook

In September 2007, Jean Bookout, 76, was driving her Toyota Camry down an unfamiliar road in Oklahoma, with her friend Barbara Schwarz seated next to her on the passenger side. Suddenly, the Camry began to accelerate on its own. Bookout tried hitting the brakes and applying the emergency brake, but the car continued to accelerate. The car eventually collided with an embankment, injuring Bookout and killing Schwarz. In a subsequent legal case, lawyers for Toyota pointed to the most common of culprits in these types of accidents: human error. “Sometimes people make mistakes while driving their cars,” one of the lawyers claimed. Bookout was older, the road was unfamiliar, these tragic things happen.

Four short links: 2 April 2014

Fault-Tolerant Resilient Yadda Yadda, Tour Tips, Punch Cards, and Public Credit

- Resilient Distributed Datasets: A Fault-Tolerant Abstraction for In-Memory Cluster Computing (PDF) — Berkeley research paper behind Apache Spark. (via Nelson Minar)

- Angular Tour — trivially add tour tips (“This is the widget basket, drag and drop for widget goodness!” type of thing) to your Angular app.

- Punchcard — generate Github-style punch card charts “with ease”.

- Where Credit Belongs for Hack (Bryan O’Sullivan) — public credit for individual contributors in a piece of corporate open source is a sign of confidence in your team, that building their public reputation isn’t going to result in them leaving for one of the many job offers they’ll receive. And, of course, of caring for your individual contributors. Kudos Facebook.

Four short links: 7 March 2014

Distributed Javascript, Inclusion, Geek's Shenzhen Tourguide, Bitcautionary Tales

- Coalesce — communication framework for distributed JavaScript. Looking for important unsolved problems in computer science? Reusable tools for distributed anything.

- Where Do All The Women Go? — Inclusion of at least one woman among the conveners increased the proportion of female speakers by 72% compared with those convened by men alone.

- The Ultimate Electronics Hobbyists Guide to Shenzhen — by OSCON legend and Kiwi Foo alum, Jon Oxer.

- Bitcoin’s Uncomfortable Similarity to Some Shady Episodes in Financial History (Casey Research) — Bitcoin itself need serious work if it is to find a place in that movement long term. It lacks community governance, certification, accountability, regulatory tension, and insurance—all of which are necessary for a currency to be successful in the long run. (via Jim Stogdill)

Four short links: 6 March 2014

Repoveillance, Mobiveillance, Discovery and Orchestration, and Video Analysis

- Repo Surveillance Network — An automated reader attached to the spotter car takes a picture of every license plate it passes and sends it to a company in Texas that already has more than 1.8 billion plate scans from vehicles across the country.

- Mobile Companies Work Big Data — Meanwhile companies are taking different approaches to user consent. Orange collects data for its Flux Vision data product from French mobile users without offering a way for them to opt-out, as does Telefonica’s equivalent service. Verizon told customers in 2011 it could use their data and now includes 100 million retail mobile customers by default, though they can opt out online.

- Serfdom — a decentralised solution for service discovery and orchestration that is lightweight, highly available, and fault tolerant.

- Longomatch — a free video analysis software for sport analysts with unlimited possibilities: Record, Tag, Review, Draw, Edit Videos and much more! (via Mark Osborne)

Four short links: 4 June 2013

Distributed Browser-Based Computation, Streaming Regex, Preventing SQL Injections, and SVM for Faster Deep Learning

- WeevilScout — browser app that turns your browser into a worker for distributed computation tasks. See the poster (PDF). (via Ben Lorica)

- sregex (Github) — A non-backtracking regex engine library for large data streams. See also slide notes from a YAPC::NA talk. (via Ivan Ristic)

- Bobby Tables — a guide to preventing SQL injections. (via Andy Lester)

- Deep Learning Using Support Vector Machines (Arxiv) — we are proposing to train all layers of the deep networks by backpropagating gradients through the top level SVM, learning features of all layers. Our experiments show that simply replacing softmax with linear SVMs gives significant gains on datasets MNIST, CIFAR-10, and the ICML 2013 Representation Learning Workshop’s face expression recognition challenge. (via Oliver Grisel)
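
For the Bobby Tables item above, the core advice is to pass user input as bound parameters rather than splicing it into the SQL string. A minimal sketch using Python's standard `sqlite3` module follows; the `students` table and the sample names are assumptions chosen for illustration, not taken from the guide.

```python
import sqlite3

# In-memory database so the example leaves nothing behind.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE students (name TEXT)")
conn.execute("INSERT INTO students (name) VALUES (?)", ("Alice",))

# Hostile input that would break a query built by string concatenation.
hostile = "Robert'); DROP TABLE students;--"

# The ? placeholder sends the value separately from the SQL text,
# so it is stored as plain data and never parsed as SQL.
conn.execute("INSERT INTO students (name) VALUES (?)", (hostile,))

rows = [name for (name,) in conn.execute("SELECT name FROM students ORDER BY rowid")]
print(rows)
```

The table survives and the hostile string is stored verbatim as an ordinary name.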

Four short links: 15 March 2012

Javascript STM, HTML5 Game Mashup, BerkeleyDB Architecture, and API Ontologies

- atomize.js — a distributed Software Transactional Memory implementation in Javascript.

- mari0 — not only a great demonstration of what’s possible in web games, but also a clever mashup of Mario and Portal.

- Lessons From BerkeleyDB — chapter on BerkeleyDB’s design, architecture, and development philosophy from Architecture of Open Source Applications. (via Pete Warden)

- An API Ontology — I currently see most real-world deployed APIs fit into a few different categories. All have their pros and cons, and it’s important to see how they relate to one other.